Appears in collection: Learning and Optimization in Luminy - LOL2022 / Apprentissage et Optimisation à Luminy - LOL2022

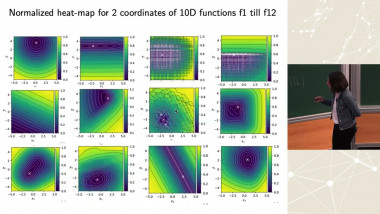

We present a list of counterexamples to conjectures in smooth convex coercive optimization. We detail two extensions of the gradient descent method that are of interest in machine learning: gradient descent with exact line search, and Bregman descent (also known as mirror descent). We show that both can fail to converge in general. These examples rely on general smooth convex interpolation results: given a decreasing sequence of convex compact sets in the plane whose boundaries are $C^k$ curves ($k > 1$, arbitrary) with positive curvature, there exists a $C^k$ convex function for which each set of the sequence is a sublevel set. The talk provides proof arguments for this result and details how it can be used to construct the announced counterexamples.
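For readers unfamiliar with the two methods, here is a minimal sketch of their standard textbook updates, assuming NumPy. It is not taken from the talk, and the function names are hypothetical. Exact line search is shown only on a strongly convex quadratic, where the optimal step has a closed form, and Bregman descent uses the entropy kernel $h(x) = \sum_i x_i \log x_i$ on the positive orthant. On such well-behaved problems both methods converge; the talk's point is that suitably constructed $C^k$ convex functions exist for which they do not.

```python
import numpy as np


def exact_line_search_gd(A, b, x0, iters=100):
    """Gradient descent with exact line search on f(x) = 0.5 x^T A x - b^T x.

    Assumes A is symmetric positive definite, so the step
    t = (g^T g) / (g^T A g) exactly minimizes t -> f(x - t g).
    """
    x = np.asarray(x0, dtype=float)
    for _ in range(iters):
        g = A @ x - b  # gradient of the quadratic
        gg = g @ g
        if gg < 1e-30:  # numerically stationary: stop
            break
        t = gg / (g @ (A @ g))  # exact line-search step
        x = x - t * g
    return x


def bregman_descent_entropy(grad_f, x0, step, iters=200):
    """Bregman (mirror) descent with kernel h(x) = sum_i x_i log x_i.

    The step argmin_x <grad_f(x_k), x> + D_h(x, x_k) / step over the
    positive orthant has the closed form x_k * exp(-step * grad_f(x_k)).
    """
    x = np.asarray(x0, dtype=float)
    for _ in range(iters):
        x = x * np.exp(-step * grad_f(x))  # multiplicative update keeps x > 0
    return x


# Usage on two well-behaved problems (both methods converge here).
A = np.array([[3.0, 1.0], [1.0, 2.0]])
b = np.array([1.0, 1.0])
x_gd = exact_line_search_gd(A, b, x0=[0.0, 0.0])  # approaches A^{-1} b = [0.2, 0.4]

c = np.array([1.0, 2.0])
x_md = bregman_descent_entropy(lambda x: x - c, x0=[1.0, 1.0], step=0.5)  # approaches c
```

The closed-form step comes from $f(x - tg) = f(x) - t\,g^\top g + \tfrac{t^2}{2}\,g^\top A g$; for a general convex coercive $f$, the exact step would instead require a one-dimensional minimization at each iteration.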