Information-Theoretic Lower Bounds for Distributed Function Computation

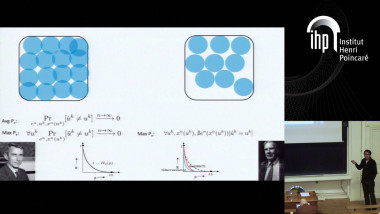

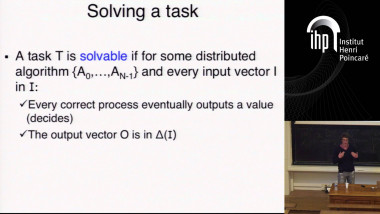

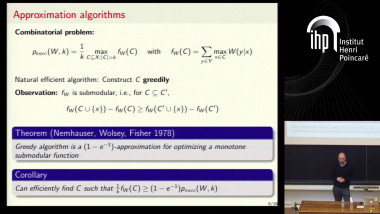

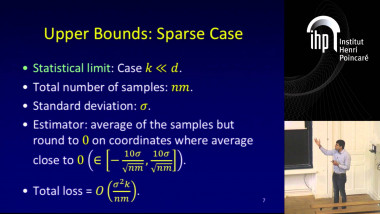

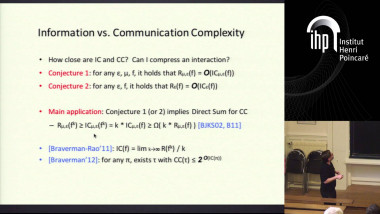

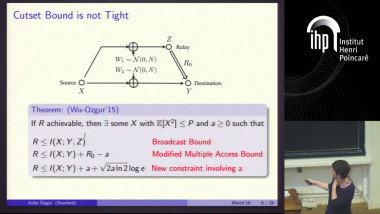

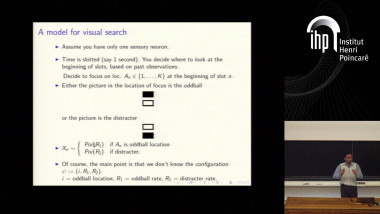

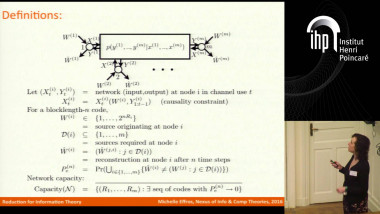

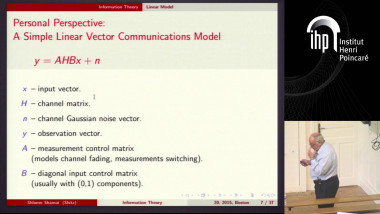

In this talk, based on joint work with Aolin Xu, I will describe a general information-theoretic methodology for deriving lower bounds on the minimum time required by any scheme for distributed function computation over a network of point-to-point channels with finite capacity to achieve a given accuracy with a given probability. The main ingredients are:

- A lower bound on conditional mutual information via so-called small ball probabilities, which captures the influence on the computation time of the joint distribution of the initial observations at the nodes, the structure of the function, and the desired level of accuracy.
- An upper bound on conditional mutual information via strong data processing inequalities, which complements and strengthens the usual cutset-capacity upper bounds.
- A multi-cutset analysis that quantifies the dissipation of information as it flows across a succession of cutsets in the network. This analysis is based on reducing a general network to a bidirected chain, and the results highlight the dependence of the computation time on the diameter of the network, a fundamental parameter that has been missing from most of the existing results on distributed computation over networks of noisy channels.
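
To give a rough feel for how a strong data processing inequality can tighten a cutset-capacity bound, here is a minimal sketch for a toy setting that is not taken from the talk: a chain of identical binary symmetric channels BSC(p), each used once per hop, so that U → X_1 → Y_1 → ... → X_n → Y_n is a Markov chain. It relies on two standard facts: the capacity of BSC(p) is 1 - h2(p) bits, and its contraction coefficient for mutual information is (1 - 2p)^2. The function names and the single-use-per-hop assumption are illustrative choices, not the authors' construction.

```python
import math


def binary_entropy(p: float) -> float:
    """Binary entropy h2(p) in bits, with h2(0) = h2(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(p: float) -> float:
    """Capacity of a binary symmetric channel with crossover probability p."""
    return 1.0 - binary_entropy(p)


def bsc_sdpi_constant(p: float) -> float:
    """Contraction coefficient of BSC(p): I(U;Y) <= (1 - 2p)^2 * I(U;X)."""
    return (1.0 - 2.0 * p) ** 2


def chain_information_bound(p: float, hops: int, source_bits: float = 1.0) -> float:
    """Upper bound on I(U; Y_n) after `hops` BSC(p) hops, one use per hop.

    Applies the contraction once per hop, starting from I(U; X_1) <= source_bits.
    This is a toy assumption, not the general multi-cutset analysis.
    """
    return source_bits * bsc_sdpi_constant(p) ** hops


if __name__ == "__main__":
    p = 0.1
    print("capacity (cutset-style bound, independent of hops):", bsc_capacity(p))
    for hops in range(1, 5):
        print(hops, "hops, contraction bound:", chain_information_bound(p, hops))
```

In this toy chain the capacity bound stays the same no matter how many hops separate source and destination, whereas the contraction bound decays geometrically with the number of hops. That is the kind of dependence on path length, and hence on network diameter, that the multi-cutset analysis is meant to capture.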