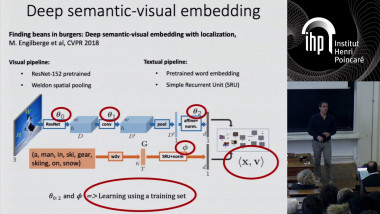

Designing multimodal deep architectures for Visual Question Answering

Multimodal representation learning for text and images has been extensively studied in recent years. Currently, one of the most popular tasks in this field is Visual Question Answering (VQA). I will introduce this complex multimodal task, which consists of answering a natural-language question about an image. Solving it requires both visual and textual deep network models, and the high-level interactions between these two modalities must be carefully designed into the model for it to produce the right answer. Projecting the unimodal representations into a shared multimodal space is meant to extract and model the relevant correlations between the two modalities. In addition, the model must be able to understand the whole scene, focus its attention on the visual regions relevant to the question, and discard the information that is irrelevant to it.
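The two ingredients described above can be illustrated with a minimal sketch. First, both modalities are projected into a shared space. Second, question-guided soft attention weights the image regions, and the attended visual vector is fused with the question by element-wise product before answers are scored. This is not the architecture presented in the talk. The function names (`attend`, `answer_question`), the toy dimensions and the weight matrices `W_v`, `W_q`, `W_a` are hypothetical. A real model would learn these matrices and obtain the region features and question embedding from pretrained visual and textual networks.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Linear projection y = W x (W has one row per output dimension)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Vector) -> Vector:
    """Normalize scores into a probability distribution (max-shifted for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(regions: List[Vector], query: Vector) -> Tuple[Vector, Vector]:
    """Question-guided soft attention: weight each region by its relevance to the query.

    Regions with low relevance scores receive small weights, so their
    information is largely discarded from the pooled visual vector.
    """
    weights = softmax([dot(r, query) for r in regions])
    dim = len(regions[0])
    pooled = [sum(w * r[k] for w, r in zip(weights, regions)) for k in range(dim)]
    return pooled, weights


def answer_question(
    regions: List[Vector],  # one visual feature vector per image region
    question: Vector,       # a fixed-size embedding of the question
    W_v: Matrix,            # hypothetical projection: visual space -> joint space
    W_q: Matrix,            # hypothetical projection: text space -> joint space
    W_a: Matrix,            # hypothetical classifier: joint space -> answer scores
) -> Tuple[Vector, Vector]:
    """Return (answer probabilities, attention weights over regions)."""
    # 1. Project both modalities into the shared multimodal space.
    v_joint = [matvec(W_v, r) for r in regions]
    q_joint = matvec(W_q, question)
    # 2. Focus on the regions relevant to the question.
    v_att, weights = attend(v_joint, q_joint)
    # 3. Fuse the two modalities with an element-wise (Hadamard) product.
    fused = [a * b for a, b in zip(v_att, q_joint)]
    # 4. Score a fixed vocabulary of candidate answers.
    return softmax(matvec(W_a, fused)), weights


if __name__ == "__main__":
    # Toy example: three regions with 3-d features, a 2-d joint space, two answers.
    regions = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    question = [0.0, 3.0]
    W_v = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
    W_q = [[1.0, 0.0], [0.0, 1.0]]
    W_a = [[1.0, 0.0], [0.0, 1.0]]
    answers = ["yes", "no"]
    probs, weights = answer_question(regions, question, W_v, W_q, W_a)
    print("attention:", [round(w, 3) for w in weights])
    print("answer:", answers[probs.index(max(probs))])
```

In the toy run, the question vector aligns with the second region, which therefore receives most of the attention mass. The element-wise product is one of the simplest fusion operators. Richer bilinear fusion schemes can model more interactions between the two spaces, at a higher parameter cost.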