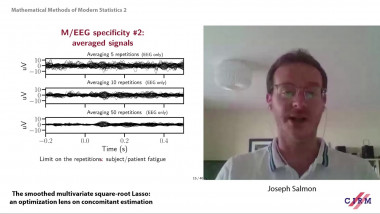

The smoothed multivariate square-root Lasso: an optimization lens on concomitant estimation

In high-dimensional sparse regression, pivotal estimators are estimators for which the optimal regularization parameter is independent of the noise level. The canonical pivotal estimator is the square-root Lasso, which, like its derivatives, is formulated as a "non-smooth + non-smooth" optimization problem. Modern techniques for solving such problems include smoothing the datafitting term so that fast, efficient proximal algorithms can be applied. In this work we focus on minimax sup-norm convergence rates for non-smoothed and smoothed, single-task and multitask square-root Lasso-type estimators. We also provide guidelines on how to set the smoothing hyperparameter, and illustrate the benefit of these guidelines on synthetic data. This is joint work with Quentin Bertrand (INRIA), Mathurin Massias, Olivier Fercoq and Alexandre Gramfort.
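To make the idea of smoothing the datafitting term concrete, here is a minimal single-task sketch. It assumes the concomitant formulation common in the literature, in which the square-root datafit ||r||_2 / sqrt(n) is replaced by the minimum over sigma >= sigma_min of ||r||^2 / (2 n sigma) + sigma / 2; the abstract does not spell out its formulation, so this may differ from the one used in the talk. The function name `smoothed_sqrt_datafit` and the parameter name `sigma_min` are hypothetical.

```python
import math


def smoothed_sqrt_datafit(residual, sigma_min):
    """Smoothed square-root datafit (illustrative sketch, assumed formulation).

    Computes min over sigma >= sigma_min of ||r||^2 / (2 n sigma) + sigma / 2
    and returns (value, minimizing sigma).
    """
    n = len(residual)
    # Scaled residual norm ||r||_2 / sqrt(n): the plain square-root Lasso datafit.
    scaled_norm = math.sqrt(sum(r * r for r in residual) / n)
    # The unconstrained minimizer is sigma = scaled_norm; the constraint clips it.
    sigma = max(scaled_norm, sigma_min)
    value = scaled_norm ** 2 / (2 * sigma) + sigma / 2
    return value, sigma


# Large residual: the constraint is inactive, so the value is the plain norm.
value_large, sigma_large = smoothed_sqrt_datafit([3.0, 4.0], sigma_min=1.0)

# Small residual: the datafit becomes quadratic, hence differentiable at zero.
value_zero, sigma_zero = smoothed_sqrt_datafit([0.0, 0.0], sigma_min=1.0)
```

Under this assumption, the smoothed datafit coincides with the square-root datafit whenever the scaled residual norm exceeds `sigma_min` and is quadratic below it, which removes the non-differentiability at zero residual. The minimizing sigma can be read as a noise-level estimate, which is the sense in which the estimation is "concomitant".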