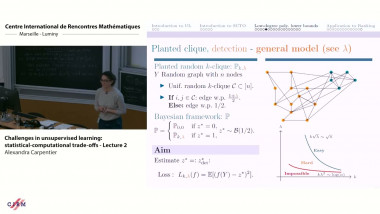

Challenges in unsupervised learning: statistical-computational trade-offs - Lecture 2

By Alexandra Carpentier

Appears in collection : New challenges in high-dimensional statistics / Statistique mathématique 2025

Unsupervised learning is a central challenge in artificial intelligence, lying at the intersection of statistics and machine learning. The goal is to uncover patterns in unlabelled data by designing learning algorithms that are both computationally efficient that is, run in polynomial time and statistically effective, meaning they minimize a relevant error criterion. Over the past decade, significant progress has been made in understanding statisticalcomputational trade-offs: for certain canonical « vanilla » problems, it is now widely believed that no algorithm can achieve both statistical optimality and computational efficiency. However, somewhat surprisingly, many extensions of these widely accepted conjectures to slightly modified models have recently been proven false. These variations introduce additional structure that can be exploited to bypass the presumed limitations. In these talks, I will begin by presenting a vanilla problem for which a statistical computational trade-off is strongly conjectured. I will then discuss a specific class of more complex unsupervised learning problems namely, ranking problems in which extensions of the standard conjectures have been refuted, and I will aim to explain the underlying reasons why.