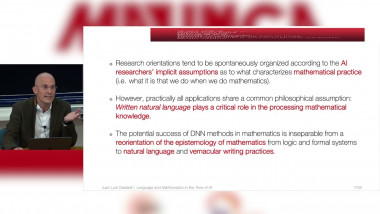

Language and Mathematics in the Time of AI. Philosophical and Theoretical Perspectives

By Juan Luis Gastaldi

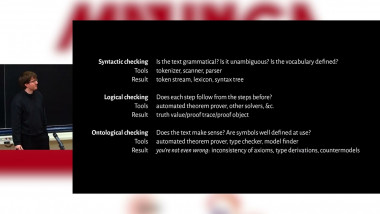

Towards autoformalization of textbook mathematics with natural proof checking

By De Lon Adrian

Appears in collection: Mathematics for and by Large Language Models

Large language models (LLMs) are trained in a very simple way, and many of the properties we attribute to them are already present in the training data. In this talk, we will review how LLMs are trained today and which new training paradigms aim to ground LLMs in the effects of their own generations. In code generation, for instance, this means grounding the LLM in the feedback obtained by executing the code it generates. For Lean proof-step prediction, we can similarly use the feedback from executing tactics. We believe that closing the loop between “open” generation and grounding in more formal systems can bridge the gap between informal and formal uses of LLMs.
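To make the idea of execution feedback concrete, here is a minimal Python sketch that assumes a simple binary pass/fail reward. The function name `execution_reward`, the `(input, expected output)` test format, and the hard-coded candidate programs (standing in for samples drawn from an LLM) are all hypothetical illustrations, not the method presented in the talk.

```python
from typing import List, Tuple

# Hypothetical test format: (input argument, expected output) pairs
TestCase = Tuple[int, int]


def execution_reward(candidate_src: str, func_name: str, tests: List[TestCase]) -> float:
    """Return 1.0 if the candidate defines `func_name` and passes every test, else 0.0."""
    namespace: dict = {}
    try:
        # WARNING: exec on untrusted model output is unsafe; real pipelines run it in a sandbox
        exec(candidate_src, namespace)
        fn = namespace[func_name]
        for arg, expected in tests:
            if fn(arg) != expected:
                return 0.0
    except Exception:
        # Syntax errors, missing definitions, and runtime errors all count as failure
        return 0.0
    return 1.0


# Hypothetical stand-ins for code samples generated by an LLM
candidates = [
    "def square(x):\n    return x * x\n",
    "def square(x):\n    return x + x\n",
    "def square(x) return x*x",  # syntax error
]
tests = [(0, 0), (2, 4), (3, 9)]

# Execution feedback: score each sample by actually running it
rewards = [execution_reward(c, "square", tests) for c in candidates]
print(rewards)  # [1.0, 0.0, 0.0]

# One possible use (an assumption here): keep only samples that pass, e.g. for further fine-tuning
accepted = [c for c, r in zip(candidates, rewards) if r == 1.0]
```

Note that the second candidate passes two of the three tests before failing on `3`, which is why execution against several test cases gives a stronger signal than a single check. Whether such rewards are used to filter samples or as a reinforcement-learning signal is a design choice this sketch leaves open.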