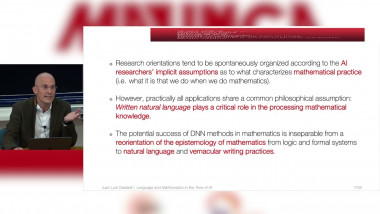

Language and Mathematics in the Time of AI. Philosophical and Theoretical Perspectives

By Juan Luis Gastaldi

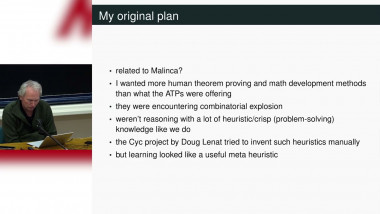

Some remarks about machine learning and (un)natural proving

By Josef Urban

Appears in collection: Statistics and Machine Learning at Paris-Saclay (2023 Edition)

In this talk, I will present some results on optimization in the context of federated learning with compression. I will first summarise the main challenges and the types of results the community has obtained, and then dive into some more recent results on tradeoffs between convergence and compression rates, and on user heterogeneity. In particular, I will describe two fundamental phenomena (and the related proof techniques): (1) how user heterogeneity affects the convergence of federated optimization methods in the presence of communication constraints, and (2) the robustness of distributed stochastic algorithms to perturbations of the iterates, and its link with model compression. I will then introduce and discuss a new compression scheme based on random codebooks and unitarily invariant distributions.
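The abstract does not spell out an algorithm, so the following is only a generic illustration of phenomenon (1). It is not the speaker's method and not the random-codebook scheme mentioned above. It is a minimal sketch, assuming quadratic local objectives f_i(x) = ½‖x − c_i‖² and the standard unbiased rand-k sparsifier as the compression operator. The names `rand_k`, `compressed_gd` and `dist` are hypothetical helpers introduced here for illustration.

```python
import random

def rand_k(vec, k, rng):
    """Unbiased rand-k sparsification: keep k random coordinates, scaled by d/k."""
    d = len(vec)
    out = [0.0] * d
    for j in rng.sample(range(d), k):
        out[j] = vec[j] * d / k
    return out

def compressed_gd(centers, k, lr, steps, seed=0):
    """Server-side gradient descent on the average of f_i(x) = 0.5*||x - c_i||^2,
    where each user uploads a rand-k compressed version of its local gradient."""
    rng = random.Random(seed)
    n, d = len(centers), len(centers[0])
    x = [0.0] * d
    for _ in range(steps):
        agg = [0.0] * d
        for c in centers:
            grad = [xj - cj for xj, cj in zip(x, c)]  # exact local gradient
            msg = rand_k(grad, k, rng)                # compressed upload
            for j in range(d):
                agg[j] += msg[j] / n                  # server averages messages
        x = [xj - lr * gj for xj, gj in zip(x, agg)]
    return x

def dist(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) ** 0.5

# Both populations share the same global optimum (the mean of the centers).
d, n = 10, 5
mean = [1.0] * d
homo = [list(mean) for _ in range(n)]
hetero = [[1.0 + (i - 2) * 0.5 * (-1) ** j for j in range(d)] for i in range(n)]

print(dist(compressed_gd(homo, k=2, lr=0.1, steps=500), mean))
print(dist(compressed_gd(hetero, k=2, lr=0.1, steps=500), mean))
print(dist(compressed_gd(hetero, k=d, lr=0.1, steps=500), mean))
```

With identical users, every local gradient vanishes at the optimum, so the compression noise vanishes with it and the iterates converge. With heterogeneous users, the local gradients at the optimum are nonzero even though their average is zero. Compressing each of them separately therefore injects noise that does not vanish, and plain compressed gradient descent with a constant step only reaches a neighbourhood of the optimum. Without compression (k = d), the same heterogeneous problem converges.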