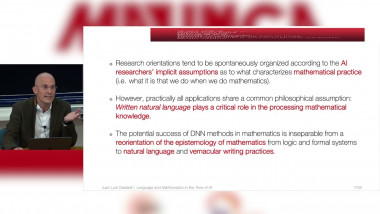

Language and Mathematics in the Time of AI. Philosophical and Theoretical Perspectives

By Juan Luis Gastaldi

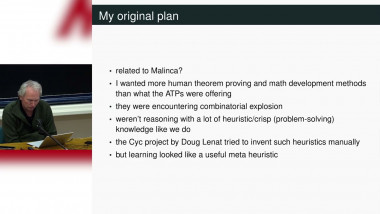

Some remarks about machine learning and (un)natural proving

By Josef Urban

Appears in collection : CEMRACS 2023: Scientific Machine Learning / CEMRACS 2023: Apprentissage automatique scientifique

High-fidelity numerical simulation of physical systems modeled by time-dependent partial differential equations (PDEs) has been at the center of many technological advances in the last century. However, for engineering applications such as design, control, optimization, data assimilation, and uncertainty quantification, which require repeated model evaluation over a potentially large number of parameters or initial conditions, these simulations remain prohibitively expensive, even with state-of-the-art PDE solvers. The need to reduce the overall cost of such downstream applications has led to the development of surrogate models, which capture the core behavior of the target system at a fraction of the cost. In this context, recent advances in machine learning provide a new path for developing surrogate models, particularly when the PDEs are not known and the system is advection-dominated. In a nutshell, we seek a data-driven latent representation of the state of the system and then learn the latent-space dynamics. This allows us to compress the information and evolve it in compressed form, thereby accelerating the models. In this series of lectures, I will present recent advances on two fronts: deterministic and probabilistic modeling of latent representations. In particular, I will introduce the notions of hyper-networks, neural networks that output another neural network, and diffusion models, a framework that allows us to represent probability distributions of trajectories directly. I will provide the foundations of these methodologies, explain how they can be adapted to scientific computing, and discuss which physical properties they need to satisfy. Finally, I will provide several examples of applications to scientific computing.
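The "compress, then evolve in compressed form" idea can be illustrated with a deliberately simple stand-in. The sketch below is not the method presented in the lectures: it replaces the learned (hyper-network or diffusion-based) representation with a linear projection obtained from an SVD of the snapshots, and replaces the learned latent dynamics with a linear map fitted by least squares. The toy data (two decaying sine modes) and all function names are assumptions made for illustration only; linear bases of this kind are generally a poor fit for advection-dominated systems, which is part of the motivation for nonlinear latent representations.

```python
import numpy as np


def make_snapshots(n_x=64, n_t=50, dt=0.05):
    """Toy snapshot matrix (hypothetical data): two decaying sine modes."""
    x = np.linspace(0.0, np.pi, n_x)
    t = dt * np.arange(n_t)
    modes = np.stack([np.sin(x), np.sin(2.0 * x)], axis=1)             # (n_x, 2)
    amps = np.stack([np.exp(-t), 0.5 * np.exp(-4.0 * t)], axis=0)      # (2, n_t)
    return modes @ amps                                                # (n_x, n_t)


def fit_latent_model(snapshots, latent_dim):
    """Fit a linear encoder/decoder and a linear latent-space time stepper."""
    # Leading left singular vectors give an orthonormal basis for the states.
    U, _, _ = np.linalg.svd(snapshots, full_matrices=False)
    basis = U[:, :latent_dim]          # encode: z = basis.T @ u, decode: u = basis @ z
    Z = basis.T @ snapshots            # latent trajectory, shape (latent_dim, n_t)
    # Least-squares fit of z_{k+1} ~= A z_k over all consecutive pairs.
    B, *_ = np.linalg.lstsq(Z[:, :-1].T, Z[:, 1:].T, rcond=None)
    return basis, B.T


def rollout(basis, A, u0, n_steps):
    """Encode the initial state, step in latent space, decode every step."""
    z = basis.T @ u0
    states = [basis @ z]
    for _ in range(n_steps):
        z = A @ z                      # cheap update: latent_dim x latent_dim
        states.append(basis @ z)
    return np.stack(states, axis=1)    # (n_x, n_steps + 1)


if __name__ == "__main__":
    snaps = make_snapshots()
    basis, A = fit_latent_model(snaps, latent_dim=2)
    pred = rollout(basis, A, snaps[:, 0], snaps.shape[1] - 1)
    rel_err = np.linalg.norm(pred - snaps) / np.linalg.norm(snaps)
    print("relative rollout error:", rel_err)
```

In this toy setting the time stepping acts on a 2-dimensional latent vector instead of the 64-dimensional state, which is where the speed-up of a surrogate comes from. The approaches described in the abstract keep the same encode, evolve, decode structure but use nonlinear, learned components.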