Adaptive rates for trend filtering using dual certificates - Lecture 1

The theory for trend filtering has been developed in a series of papers: Tibshirani [2014], Wang et al. [2016], Sadhanala and Tibshirani [2019], Sadhanala et al. [2017], Guntuboyina et al. [2020], and see Tibshirani [2020] for a very good overview and further references. In this course we will combine the approach of Dalalyan et al. [2017] with dual certificates as given in Candes and Plan [2011]. This allows us to obtain new results and extensions.

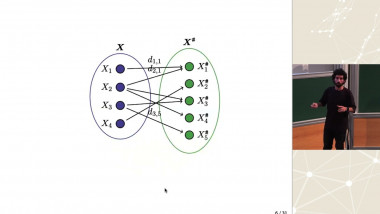

The trend filtering problem in one dimension is as follows. Let $Y\sim \mathcal{N}(f^{0},\ I)$ be a vector of observations with unknown mean $f^{0} :=\mathrm{E}Y$. Let for $f\in \mathbb{R}^{n},$ $$ (\triangle f)_{i}\ :=\ f_{i}-f_{i-1},\ i\geq 2, $$ $$ (\triangle^{2}f)_{i}\ :=\ f_{i}-2f_{i-1}+f_{i-2},\ i\geq 3, $$ $$ (\triangle^{k}f)_{i}\ :=\ (\triangle(\triangle^{k-1}f))_{i},\ i\geq k+1. $$ Then we consider the estimator $$ \hat{f}\ :=f\min_{\in \mathbb{R}^{n}}\{\Vert Y-f\Vert_{2}^{2}/n+2\lambda\Vert\triangle^{k}f\Vert_{1}}. $$ Let $S_{0} :=\{j\ :\ (\triangle^{k}f^{0})_{j}\neq 0}$ and $s_{0} :=|S_{0}|$ its size. We want to prove a "oracle'' type of result: for an appropriate choice of the tuning parameter, and modulo $\log$-factors, with high probability $\Vert\hat{f}-f^{0}\Vert_{2}^{2}/n\lesssim(s_{0}+1)/n$. For this one may apply the general theory for the Lasso. Indeed, the above is a Lasso estimator if we write $f=\Psi b$ where $\Psi\in \mathbb{R}^{n\times n}$ is the "design matrix'' or "dictionary'' and where $b_{j}=(\triangle^{k}f)_{j}, j\geq k+1$. We present the explicit expression for this dictionary and then will notice that the restricted eigenvalue conditions that are typically imposed for Lasso problems do not hold. What we will do instead is use a "dual certificate'' $q$ with index set $\mathcal{D} :=\{k+1,\ .\ .\ .\ ,\ n}$. We require that $q_{j}=\mathrm{s}\mathrm{i}\mathrm{g}\mathrm{n}(\triangle^{k}f^{0})_{j}$ if $j\in S_{0}$ and such that $|q_{j}|\leq 1-w_{j}$ if $j\in \mathcal{D}\backslash S_{0}$, where $\{w}_{j\in D\backslash S_{0}}$ is a given set of noise weights. Moreover, we require $q_{k+1}=q_{n}=0.$ We call such $q$ an interpolating vector. We show for an appropriate choice of $\lambda$ $$ \Vert\hat{f}-f^{0}\Vert_{2}^{2}/n<\sim(s_{0}+1)/n+n\lambda^{2}\Vert\triangle^{k}q\Vert_{2}^{2} $$ and that the two terms on the right hand side can be up to $\log$ terms of the same order if the elements in $S_{0}$ corresponding to different signs satisfy a minimal distance condition.

We develop a non-asymptotic theory for the problem and refine the above to sharp oracle results. Moreover, the approach we use allows extensions to higher dimensions and to total variation on graphs. For the case of graphs with cycles the main issue is to determine the noise weights, which can be done by counting the number of times an edge is used when traveling from one node to another. Extensions to other loss functions will be considered as well.