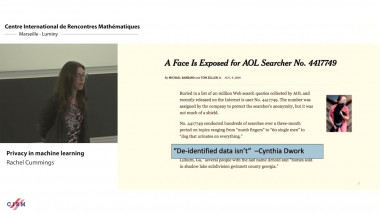

Privacy in machine learning

Privacy concerns are becoming a major obstacle to using data the way we want. It's often unclear how current regulations should translate into technology, and the changing legal landscape surrounding privacy can cause valuable data to go unused. How can data scientists make use of potentially sensitive data while providing rigorous privacy guarantees to the individuals who provided it? A growing literature on differential privacy has emerged in the last decade to address some of these concerns. Differential privacy is a parameterized notion of database privacy that gives a mathematically rigorous worst-case bound on the amount of information that can be learned about any one individual's data from the output of a computation. It ensures that if a single entry in the database were changed, the algorithm's distribution over outputs would remain approximately the same. In this talk, we will see the definition and properties of differential privacy; survey a theoretical toolbox of differentially private algorithms that come with strong accuracy guarantees; and discuss recent applications of differential privacy at major technology companies and government organizations.
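
To make the "approximately the same distribution over outputs" condition concrete: in the standard formulation, a randomized algorithm M is ε-differentially private if, for every pair of databases D and D' differing in a single entry and every set of outputs S, Pr[M(D) ∈ S] ≤ e^ε · Pr[M(D') ∈ S]. The sketch below illustrates one textbook tool, the Laplace mechanism, applied to a counting query. It is a minimal illustration and not necessarily what the talk presents; the function name `laplace_count`, its parameters, and the sample data are assumptions made for this example.

```python
import random


def laplace_count(data, predicate, epsilon, rng=None):
    """Return a noisy count of the entries in `data` that satisfy `predicate`.

    Hypothetical helper for illustration. Changing one entry changes the
    true count by at most 1, so the query has sensitivity 1, and adding
    Laplace noise with scale sensitivity / epsilon gives epsilon-DP.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng if rng is not None else random.Random()

    true_count = sum(1 for entry in data if predicate(entry))
    sensitivity = 1.0

    # The difference of two i.i.d. exponentials with rate epsilon / sensitivity
    # is Laplace-distributed with scale sensitivity / epsilon.
    rate = epsilon / sensitivity
    noise = rng.expovariate(rate) - rng.expovariate(rate)
    return true_count + noise


if __name__ == "__main__":
    ages = [23, 35, 41, 52, 67, 29]  # made-up example data
    # Smaller epsilon means stronger privacy and a noisier answer.
    print(laplace_count(ages, lambda age: age >= 40, epsilon=0.5))
```

The parameter ε controls the trade-off: the noise has standard deviation √2/ε, so halving ε doubles the typical error. A production implementation would need more care than this sketch, since naive floating-point sampling of Laplace noise is known to leak information.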