When your big data seems too small: accurate inferences beyond the empirical distribution 2/2

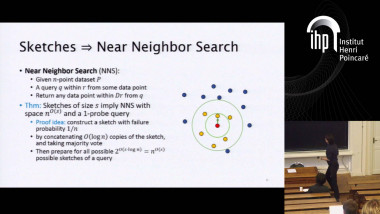

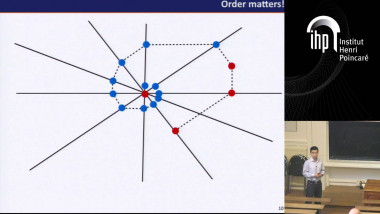

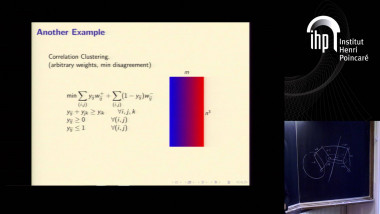

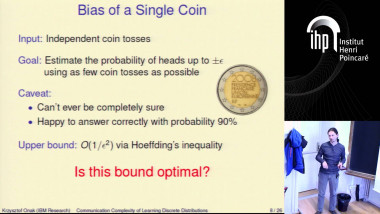

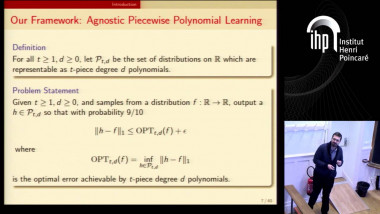

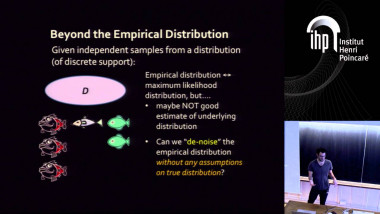

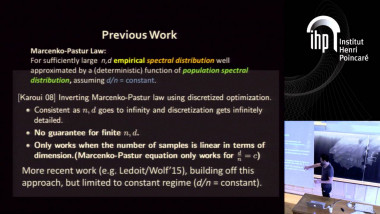

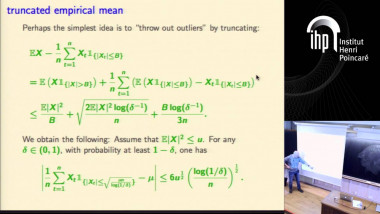

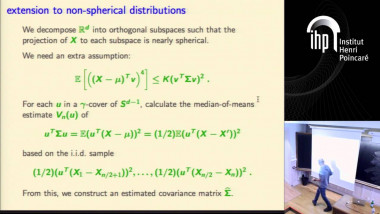

We discuss three problems related to the general challenge of making accurate inferences about a complex distribution, in the regime in which the amount of data (i. e the sample size) is too small for the empirical distribution of the samples to be an accurate representation of the underlying distribution. The first problem we consider is the following basic learning task: given independent draws from an unknown distribution over a discrete support, output an approximation of the distribution that is as accurate as possible in L1 distance (ie total variation distance). Perhaps surprisingly, it is often possible to “de-noise” the empirical distribution of the samples to return an approximation of the true distribution that is significantly more accurate than the empirical distribution, without relying on any prior assumptions on the distribution. We present an instance optimal learning algorithm which optimally performs this de-noising for every distribution for which such a de-noising is possible. One curious implication of our techniques is an algorithm for accurately estimating the number of new domain elements that would be seen given a new larger sample, of size up to n*log n. (Extrapolation beyond this sample size is provable information theoretically impossible, without additional assumptions on the distribution. ) While these results are applicable generally, we highlight an adaptation of this general approach to some problems in genomics (e. g. quantifying the number of unobserved protein coding variants). The second problem we consider is the task of accurately estimating the eigenvalues of the covariance matrix of a (high dimensional real-valued) distribution–the “population spectrum”. (These eigenvalues contain basic information about the distribution, including the presence or lack of low-dimensional structure in the distribution and the applicability of many higher-level machine learning and multivariate statistical tools. ) As we show, even in the regime where the sample size is linear or sublinear in the dimensionality of the distribution, and hence the eigenvalues and eigenvectors of the empirical covariance matrix are misleading, accurate approximations to the true population spectrum are possible. The final problem we discuss is the problem of recovering a low-rank approximation to a matrix of probabilities P, given access to an observed matrix of “counts” obtained via independent samples from the distribution defined by P. This problem can be viewed as a generalization of “community detection”, and is relevant to several recent machine learning efforts, including the work on constructing “word embeddings”. This talk is based on four papers, which are joint works with Paul Valiant, with Paul Valiant and James Zou, with Weihao Kong, and with Qingqing Huang, Sham Kakade, and Weihao Kong.