Appears in collection: 2022 - T3 - WS3 - Measure-theoretic Approaches and Optimal Transportation in Statistics

Optimal transport (OT) has recently gained a lot of interest in machine learning. It is a natural tool for comparing probability distributions in a geometrically faithful way. It finds applications in both supervised learning (using geometric loss functions) and unsupervised learning (to perform generative model fitting). However, OT is plagued by several issues, in particular:

(i) the curse of dimensionality, since estimating it from data might require a number of samples that grows exponentially with the dimension,

(ii) sensitivity to outliers, since it does not allow mass to be created or destroyed during transport,

(iii) the impossibility of transporting between two disjoint spaces.

In this talk, I will review several recent proposals to address these issues and showcase how they work hand in hand to provide a comprehensive machine learning pipeline.

The three key ingredients are:

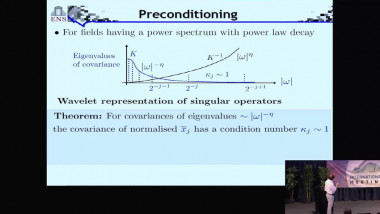

(i) entropic regularization, which defines computationally efficient loss functions in high dimensions,

(ii) unbalanced OT, which relaxes mass conservation to make OT robust to missing data and outliers,

(iii) the Gromov-Wasserstein formulation, introduced by Sturm and Mémoli, which is a non-convex quadratic optimization problem defining transport between disjoint spaces.
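As a minimal sketch of ingredient (i), assuming discrete histograms and a squared Euclidean cost (our choice for illustration, not necessarily the talk's), entropic OT can be solved with the classical Sinkhorn matrix-scaling iterations. The function name `sinkhorn` and the parameters `eps`, `n_iter` and `tol` are illustrative and not taken from any particular library.

```python
import numpy as np


def sinkhorn(a, b, C, eps=0.1, n_iter=5000, tol=1e-10):
    """Entropic OT between histograms a (n,) and b (m,) with cost matrix C (n, m).

    Approximately solves  min_P <P, C> - eps * H(P)  s.t.  P @ 1 = a,  P.T @ 1 = b,
    whose solution has the form P = diag(u) K diag(v) with K = exp(-C / eps).
    """
    # Gibbs kernel; shifting C by a constant rescales K but leaves P unchanged.
    K = np.exp(-(C - C.min()) / eps)
    u = np.ones_like(a)
    v = np.ones_like(b)
    for _ in range(n_iter):
        u = a / (K @ v)        # rescale rows to match a
        v = b / (K.T @ u)      # rescale columns to match b (exact after this step)
        if np.abs(u * (K @ v) - a).max() < tol:   # remaining row-marginal violation
            break
    return u[:, None] * K * v[None, :]


# Toy usage: two small point clouds in R^2 with a squared Euclidean cost.
rng = np.random.default_rng(0)
x = rng.normal(size=(5, 2))
y = rng.normal(size=(7, 2)) + 1.0
a = np.full(5, 1 / 5)
b = np.full(7, 1 / 7)
C = ((x[:, None, :] - y[None, :, :]) ** 2).sum(axis=-1)
C = C / C.max()                 # normalize so that eps is on a known scale
P = sinkhorn(a, b, C, eps=0.1)
loss = np.sum(P * C)            # transport cost <P, C> of the entropic plan
```

Each iteration only involves matrix-vector products, which is what makes the entropic loss cheap to evaluate. For very small `eps` the kernel `K` underflows, and a log-domain variant of the same iterations is usually preferred.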
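Ingredient (ii) can be sketched by replacing the hard marginal constraints with Kullback-Leibler penalties of weight `rho`, which turns each Sinkhorn update into a damped one with exponent `rho / (rho + eps)`. This is a minimal NumPy sketch under assumed settings: the helper `unbalanced_sinkhorn` is hypothetical, and `eps=1`, `rho=1` and the toy point cloud with one far-away outlier are chosen only to illustrate the mass-relaxation mechanism.

```python
import numpy as np


def unbalanced_sinkhorn(a, b, C, eps=1.0, rho=1.0, n_iter=2000):
    """Entropic unbalanced OT with KL marginal penalties of weight rho.

    Targets  min_P <P, C> + eps * sum(P log P - P) + rho * KL(P 1 | a) + rho * KL(P^T 1 | b),
    with KL the generalized Kullback-Leibler divergence. rho = inf gives balanced OT.
    """
    K = np.exp(-C / eps)                               # Gibbs kernel
    fi = 1.0 if np.isinf(rho) else rho / (rho + eps)   # damping exponent of the updates
    u = np.ones_like(a)
    v = np.ones_like(b)
    for _ in range(n_iter):
        u = (a / (K @ v)) ** fi
        v = (b / (K.T @ u)) ** fi
    return u[:, None] * K * v[None, :]


# Toy data: the last target point is a far-away outlier.
rng = np.random.default_rng(0)
x = rng.normal(size=(5, 2))
y = np.vstack([rng.normal(size=(6, 2)), [[5.0, 5.0]]])
a = np.full(5, 1 / 5)
b = np.full(7, 1 / 7)
C = ((x[:, None, :] - y[None, :, :]) ** 2).sum(axis=-1)

P_bal = unbalanced_sinkhorn(a, b, C, rho=np.inf)   # hard marginal constraints
P_unb = unbalanced_sinkhorn(a, b, C, rho=1.0)      # KL-relaxed marginals
mass_to_outlier_bal = P_bal[:, -1].sum()           # equals b[-1] by construction
mass_to_outlier_unb = P_unb[:, -1].sum()           # far below b[-1] on this toy data
```

With hard constraints the outlier must receive its full mass `b[-1]`, whereas the relaxed plan sends almost nothing to it because paying the KL penalty is cheaper than the transport cost. Decreasing `rho` strengthens this effect.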
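For ingredient (iii), one common way to attack the non-convex quadratic problem is to alternate between linearizing the Gromov-Wasserstein objective around the current plan and solving an entropic OT problem for that linearized cost. The sketch below assumes the squared loss (D1[i,k] - D2[j,l])**2, uniform weights and hypothetical helper names (`sinkhorn`, `entropic_gw`). It compares a cloud in R^2 with a rotated copy embedded in R^3, so only distances within each space are used and no cross-space cost is ever needed. Because the problem is non-convex, the result may depend on the initialization, here the independent coupling p q^T.

```python
import numpy as np


def sinkhorn(p, q, C, eps, n_iter=1000):
    """Balanced entropic OT plan between histograms p and q for cost C."""
    K = np.exp(-(C - C.min()) / eps)   # constant shift of C leaves the plan unchanged
    u = np.ones_like(p)
    v = np.ones_like(q)
    for _ in range(n_iter):
        u = p / (K @ v)
        v = q / (K.T @ u)
    return u[:, None] * K * v[None, :]


def entropic_gw(D1, D2, p, q, eps=0.05, n_outer=50):
    """Entropic Gromov-Wasserstein plan for the squared loss (D1[i,k] - D2[j,l])**2.

    D1 (n, n) and D2 (m, m) are distance matrices computed within each space,
    so the two spaces never have to be compared point to point.
    """
    # Part of sum_kl (D1[i,k] - D2[j,l])**2 T[k,l] fixed by the marginals p and q.
    const = (D1 ** 2) @ p[:, None] + ((D2 ** 2) @ q)[None, :]
    T = np.outer(p, q)                      # independent coupling as initialization
    for _ in range(n_outer):
        C = const - 2 * D1 @ T @ D2.T       # GW cost linearized at the current plan
        T = sinkhorn(p, q, C, eps)          # entropic OT step for that cost
    gw_loss = np.sum((const - 2 * D1 @ T @ D2.T) * T)
    return T, gw_loss


# Toy usage: a cloud in R^2 versus a rotated copy of it embedded in R^3.
rng = np.random.default_rng(0)
x = rng.normal(size=(8, 2))
theta = 0.7
R = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
y = np.hstack([x @ R.T, np.zeros((8, 1))])
D1 = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=-1)
D2 = np.linalg.norm(y[:, None, :] - y[None, :, :], axis=-1)
D1, D2 = D1 / D1.max(), D2 / D2.max()
p = np.full(8, 1 / 8)
q = np.full(8, 1 / 8)
T, gw_loss = entropic_gw(D1, D2, p, q)
```

The returned `gw_loss` evaluates the quadratic objective sum_ijkl (D1[i,k] - D2[j,l])**2 T[i,j] T[k,l] at the final plan, using the same marginal decomposition as the linearized cost.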