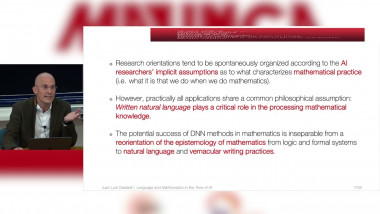

Language and Mathematics in the Time of AI. Philosophical and Theoretical Perspectives

By Juan Luis Gastaldi

Appears in collection: CEMRACS 2021: Data Assimilation and Model Reduction in High Dimensional Problems / CEMRACS 2021: Assimilation de données et réduction de modèle pour des problèmes en grande dimension

Recently, considerable progress has been made in the theoretical understanding of machine learning methods, in particular deep learning. One very promising direction is the statistical approach, which interprets machine learning as a collection of statistical methods and builds on existing techniques from mathematical statistics to derive theoretical error bounds and to understand phenomena such as overparametrization. The lecture series surveys this field and describes future challenges.