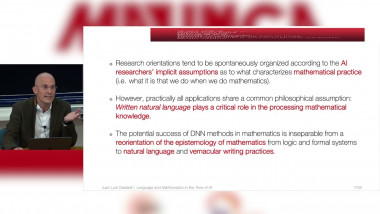

Language and Mathematics in the Time of AI. Philosophical and Theoretical Perspectives

By Juan Luis Gastaldi

Deciding What Game to Play, What Mathematics Problem to Solve

By Katie Collins

Appears in collection: Mathematics, Signal Processing and Learning / Mathématiques, traitement du signal et apprentissage

Since 2012, deep neural networks have produced outstanding results in a wide range of applications, outperforming previously existing methods on text, images, sound, video, graphs, and more. They consist of a cascade of parametrized linear and non-linear operators whose parameters are optimized to perform a fixed task. This talk addresses four aspects of deep learning through the lens of signal processing. First, we explain image classification in the context of supervised learning. Second, we show several empirical results that give some insight into the black box of neural networks. Third, we explain how neural networks create invariant representations: in the specific case of translation, it is possible to design predefined neural networks that are stable to translation, namely the Scattering Transform. Finally, we discuss several recent results in statistical learning about the generalization and approximation properties of this deep machinery.