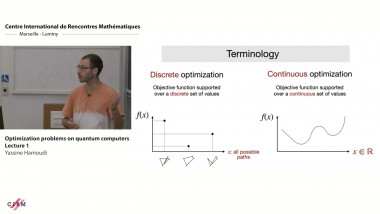

Appears in collection: Learning and Optimization in Luminy - LOL2022 / Apprentissage et Optimisation à Luminy - LOL2022

First-order non-convex optimization is at the heart of neural network training. Recent analyses have shown that the Polyak-Łojasiewicz (PL) condition is particularly well suited to analyzing the convergence of the training error for these architectures. In this short presentation, I will propose extensions of this condition that allow for more flexibility and a wider range of application scenarios, and show how stochastic gradient descent converges under these conditions. Then, I will show how to use these conditions to prove the convergence of the test error for simple deep learning architectures in an online setting.
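For reference, a differentiable function f with minimum value f* satisfies the standard PL inequality with constant μ > 0 if ½‖∇f(x)‖² ≥ μ(f(x) − f*) for all x. Such a function need not be convex. Still, if it is also L-smooth, gradient descent with a suitable constant step converges linearly to f*, and constant-step SGD with bounded gradient noise converges linearly to a neighborhood of f*. The Python sketch below illustrates only this classical condition on the one-dimensional function f(x) = x² + 3 sin²(x), a commonly used example that is non-convex yet satisfies a PL inequality. The function names, step size, noise level, and starting point are our own illustrative assumptions. The sketch does not implement the extended conditions or the test-error analysis proposed in the talk, which the abstract does not specify.

```python
import math
import random


def f(x: float) -> float:
    """Non-convex objective; its global minimum is f* = 0 at x = 0."""
    return x * x + 3.0 * math.sin(x) ** 2


def df(x: float) -> float:
    """First derivative: 2x + 3 sin(2x)."""
    return 2.0 * x + 3.0 * math.sin(2.0 * x)


def d2f(x: float) -> float:
    """Second derivative: 2 + 6 cos(2x).

    It is negative near x = pi/2, so f is not convex, and |d2f| <= 8
    everywhere, so f is L-smooth with L = 8.
    """
    return 2.0 + 6.0 * math.cos(2.0 * x)


def pl_ratio(x: float) -> float:
    """Return 0.5 * f'(x)^2 / (f(x) - f*), with f* = 0.

    The PL inequality with constant mu says this ratio is >= mu for every x != 0.
    """
    return 0.5 * df(x) ** 2 / f(x)


def sgd(x0: float, step: float, noise_std: float, n_iters: int, seed: int = 0):
    """Constant-step SGD with a hypothetical Gaussian-perturbed gradient oracle.

    Returns the last iterate and the objective value at every iterate.
    """
    rng = random.Random(seed)
    x = x0
    history = [f(x)]
    for _ in range(n_iters):
        g = df(x) + rng.gauss(0.0, noise_std)  # unbiased: E[g] = f'(x)
        x -= step * g
        history.append(f(x))
    return x, history


if __name__ == "__main__":
    # Empirical lower bound on the PL constant over a grid on [-10, 10] (not a proof).
    mu_hat = min(pl_ratio(k / 100.0) for k in range(-1000, 1001) if k != 0)
    print(f"f''(pi/2) = {d2f(math.pi / 2):.2f}, grid estimate of mu = {mu_hat:.3f}")
    # step = 0.1 is below 1/L = 0.125 for L = 8.
    x_T, hist = sgd(x0=3.0, step=0.1, noise_std=0.1, n_iters=1000)
    tail_mean = sum(hist[-200:]) / 200
    print(f"f(x_0) = {hist[0]:.3f}, mean f over last 200 iterates = {tail_mean:.2e}")
```

With a constant step, SGD is expected to settle in a noise-dominated neighborhood of f* rather than reach it exactly. Shrinking the step (or the noise) shrinks that neighborhood at the cost of a slower linear phase.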