Statistics meets tensors: methods, theory, and applications

By Anru Zhang

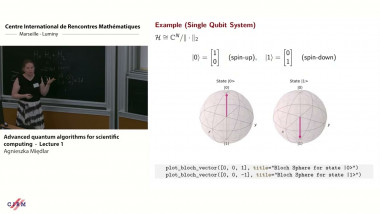

Advanced quantum algorithms for scientific computing - lecture 1

By Agnieszka Międlar

Appears in collections: International traveling workshop on interactions between low-complexity data models and sensing techniques / Colloque international et itinérant sur les interactions entre modèles de faible complexité et acquis, Ecoles de recherche

We study the convergence of the iterative projected gradient (IPG) algorithm over arbitrary (typically nonconvex) sets when both the gradient and the projection oracles are computed only approximately. We consider several notions of approximation and show that, among them, the Progressive Fixed Precision (PFP) and (1+epsilon)-optimal oracles can achieve the same accuracy as the exact IPG algorithm. We further show that the PFP scheme also maintains the (linear) rate of convergence of the exact algorithm under the same embedding assumption, whereas the (1+epsilon)-optimal scheme requires a stronger embedding condition and moderate compression ratios, and typically converges more slowly. We apply these results to accelerate the solution of a class of data-driven compressed sensing problems, replacing iterative exhaustive searches over large datasets with fast approximate nearest-neighbour searches based on the cover tree data structure. Finally, time permitting, we will give examples of this theory applied in practice to obtain rapid, enhanced solutions for magnetic resonance fingerprinting, an emerging protocol for quantitative MRI.
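To make the setting concrete, the following is a minimal sketch of an IPG iteration with an inexact projection onto a finite dataset. It is not the implementation discussed in the talk. The least-squares objective, the function names (`approx_project`, `inexact_ipg`), the step size, and the toy problem sizes are all assumptions made for illustration. In particular, the (1+epsilon) oracle is only simulated: it computes every distance by brute force and then returns an arbitrary point within a factor (1+epsilon) of the nearest one, so it shows the guarantee but saves no computation. A cover tree search would aim to provide the same kind of guarantee at much lower cost.

```python
import math
import random


def matvec(A, x):
    """Return A x for a matrix stored as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def rmatvec(A, r):
    """Return A^T r for a matrix stored as a list of rows."""
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(A[0]))]


def approx_project(z, points, eps, rng):
    """Simulated (1+eps)-approximate projection of z onto the finite set `points`.

    Assumption for illustration: all distances are computed exhaustively, then
    any point within (1 + eps) times the smallest distance may be returned.
    """
    dists = [math.dist(p, z) for p in points]
    best = min(dists)
    candidates = [i for i, d in enumerate(dists) if d <= (1.0 + eps) * best]
    return points[rng.choice(candidates)]


def inexact_ipg(y, A, points, step, eps, n_iter, rng):
    """Projected gradient on 0.5 * ||y - A x||^2 with the inexact projection above."""
    x = [0.0] * len(A[0])
    for _ in range(n_iter):
        residual = [ai - yi for ai, yi in zip(matvec(A, x), y)]
        grad = rmatvec(A, residual)                      # gradient A^T (A x - y)
        z = [xi - step * gi for xi, gi in zip(x, grad)]  # gradient step
        x = approx_project(z, points, eps, rng)          # inexact projection
    return x


# Toy usage: recover a dataset point from compressed measurements (hypothetical sizes).
rng = random.Random(0)
n, m, n_points = 16, 8, 200
points = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n_points)]
A = [[rng.gauss(0.0, 1.0 / math.sqrt(m)) for _ in range(n)] for _ in range(m)]
x_true = points[7]
y = matvec(A, x_true)
x_hat = inexact_ipg(y, A, points, step=1.0, eps=0.2, n_iter=20, rng=rng)
print("distance to the true point:", math.dist(x_hat, x_true))
```

Whether the toy run recovers `x_true` depends on the random draw, the compression ratio `m / n`, and `eps`; the sketch is meant only to show where the approximate oracle enters the iteration.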