Hybrid AI for Knowledge Representation and Model-based Image Understanding - Towards Explainability

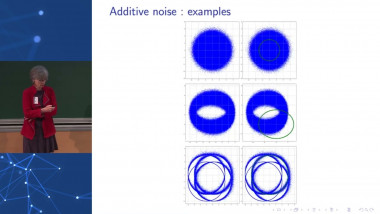

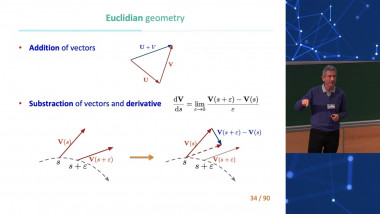

This presentation focuses on hybrid AI as a step towards explainability, specifically in the domain of spatial reasoning and image understanding. Image understanding benefits from modeling knowledge about the observed scene, the objects it contains, and the relationships between them. In this context, we show the contribution of hybrid artificial intelligence, which combines different types of formalisms and methods, and combines knowledge with data. Knowledge representation may rely on symbolic and qualitative approaches, as well as semi-qualitative ones to account for imprecision or vagueness. Structural information can be modeled in several formalisms, such as graphs, ontologies, logical knowledge bases, or neural networks, on which reasoning is then based. Image understanding is then expressed as a spatial reasoning problem. These approaches will be illustrated with examples from medical imaging that show the usefulness of combining them.
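As a rough illustration of how a semi-qualitative spatial relation could be attached to a graph of scene objects, the sketch below grades the relation "to the left of" between object centroids and stores the degrees as edge attributes. It is a minimal sketch and not the method presented in the talk: the function name `left_of_degree`, the linear angular membership, the `tolerance` parameter, and the object names and coordinates are all assumptions made for this example.

```python
import math


def left_of_degree(ref, target, tolerance=math.pi / 2):
    """Degree in [0, 1] to which `target` lies to the left of `ref`.

    Points are (x, y) centroids with x increasing to the right.
    The linear decrease with angle is an assumed membership function.
    """
    dx = target[0] - ref[0]
    dy = target[1] - ref[1]
    if dx == 0 and dy == 0:
        return 0.0  # coincident centroids: no direction is defined
    # Angle between the vector ref -> target and the "left" direction (-1, 0).
    angle = abs(math.atan2(dy, -dx))
    return max(0.0, 1.0 - angle / tolerance)


# Hypothetical scene: three objects described only by their centroids.
centroids = {"A": (10.0, 20.0), "B": (30.0, 20.0), "C": (20.0, 30.0)}

# Scene graph as a dict of directed edges (ref, target) -> degree of
# "target is to the left of ref".
relations = {
    (ref, target): left_of_degree(centroids[ref], centroids[target])
    for ref in centroids
    for target in centroids
    if ref != target
}

for (ref, target), degree in sorted(relations.items()):
    print(f"{target} left of {ref}: {degree:.2f}")
```

A graded degree rather than a yes/no answer lets the relation remain meaningful for objects that are only roughly to the left, which is the kind of vagueness semi-qualitative representations are meant to capture.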