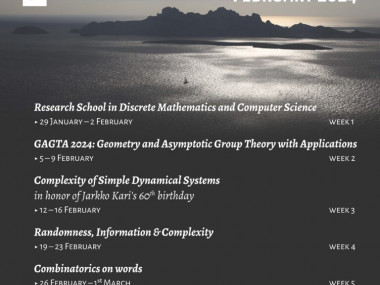

Collection Randomness, Information & Complexity / Aléatoire, information et complexité - Week 4

Various connections between information and computation theory are studied since many decades. A notion of algorithmic (Kolmogorov) complexity that uses computation theory to measure information in finite objects (instead of random variables) appeared in 1960s and became by now a standard tool. There are many examples of problems where information-theoretic tools like Shannon’s entropy were used to analyze complexity of algorithms and prove lower bounds. Now, several directions of research based on theses connections are currently active: communication and information complexity, complexity and dimensions, complexity vs compression, pseudo-random structures, complexity of computation and Kolmogorov complexity, and randomness and computability theory. The goal of this week is to gather experts from all of these directions, who share a common general view on randomness, information and complexity.

Appears in collection : THEMATIC MONTH - MOIS THÉMATIQUE : Discrete Mathematics & Computer Science: Groups, Dynamics, Complexity, Words / Mathématiques discrètes et informatique : mots, complexité, dynamique, groupes

Organizer(s) Bienvenu, Laurent ; Perifel, Sylvain ; Romashchenko, Andrei ; Shen, Alexander ; Theyssier, Guillaume

Date(s) 19/02/2024 - 23/02/2024

linked URL https://conferences.cirm-math.fr/3151.html