Contextual Bandit: from Theory to Applications

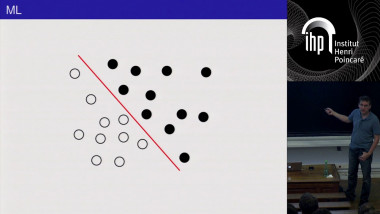

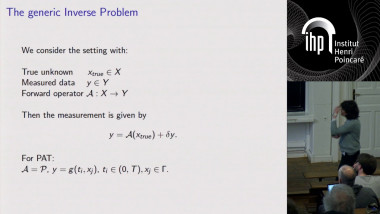

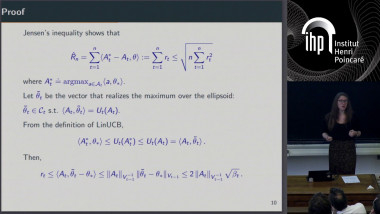

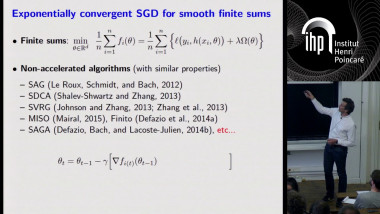

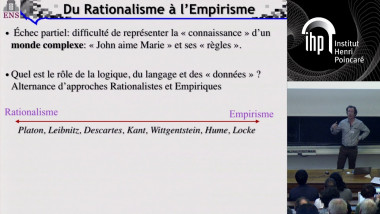

Trading off exploration against exploitation is a key problem in computer science: it is about learning how to make decisions in order to optimize a long-term cost. While many areas of machine learning aim at estimating a hidden function from a given dataset, reinforcement learning is rather about optimally building a dataset of observations of this hidden function, one that contains just enough information to guarantee that its maximum is properly estimated. The first part of this talk reviews the main techniques and results known for the contextual linear bandit, relying mostly on the recent book by Lattimore and Szepesvári (2019) [1]. However, real-world problems often do not behave as the theory assumes. In the second part of this talk, we share our experience in applying bandit algorithms in industry [2]. In particular, while the system is supposed to interact with its environment, customer feedback is often delayed or missing, which prevents the necessary updates from being performed. We propose a solution to this issue, present some alternative models and architectures, and close the presentation with open questions on sequential learning beyond bandits.
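To make the setting concrete, here is a minimal sketch of a contextual linear bandit in which rewards reach the learner only after a fixed delay. It uses LinUCB, a standard algorithm for this setting, and is not necessarily the method presented in the talk. All names (`LinUCB`, `run`, `pending`), the Gaussian features, the noise level, and the fixed delay are assumptions chosen for illustration.

```python
import numpy as np


class LinUCB:
    """Minimal LinUCB with a single shared parameter vector (illustrative)."""

    def __init__(self, dim, alpha=1.0, reg=1.0):
        self.alpha = alpha              # width of the confidence bonus
        self.A = reg * np.eye(dim)      # regularized Gram matrix
        self.b = np.zeros(dim)          # sum of reward-weighted features
        self.n_updates = 0              # number of rewards incorporated

    def select(self, features):
        """Return the index of the arm with the highest upper confidence bound.

        features: array of shape (n_arms, dim), one feature vector per arm.
        """
        A_inv = np.linalg.inv(self.A)
        theta = A_inv @ self.b          # ridge-regression estimate
        means = features @ theta
        # Exploration bonus sqrt(x^T A^{-1} x), computed for every arm.
        bonus = np.sqrt(np.einsum("ij,jk,ik->i", features, A_inv, features))
        return int(np.argmax(means + self.alpha * bonus))

    def update(self, x, reward):
        """Incorporate one observed (features, reward) pair."""
        self.A += np.outer(x, x)
        self.b += reward * x
        self.n_updates += 1


def run(n_rounds=2000, n_arms=5, dim=4, delay=10, seed=0):
    """Simulate a linear bandit whose rewards arrive `delay` rounds late."""
    rng = np.random.default_rng(seed)
    theta_star = rng.normal(size=dim)   # hidden parameter (assumed model)
    theta_star /= np.linalg.norm(theta_star)
    agent = LinUCB(dim)
    pending = []                        # (arrival round, features, reward)
    regret = 0.0
    for t in range(n_rounds):
        # Only feedback that has already arrived can be used for updates.
        arrived = [p for p in pending if p[0] <= t]
        pending = [p for p in pending if p[0] > t]
        for _, x, r in arrived:
            agent.update(x, r)

        features = rng.normal(size=(n_arms, dim))
        arm = agent.select(features)
        expected = features @ theta_star
        reward = expected[arm] + 0.1 * rng.normal()
        pending.append((t + delay, features[arm], reward))
        regret += expected.max() - expected[arm]
    return regret, agent
```

Calling `run(delay=0)` and `run(delay=100)` with the same seed gives a rough feel for how stale feedback affects cumulative regret in this toy simulation. Feedback that never arrives would simply stay in `pending` forever, which is one way to see why missing feedback is harder to handle than delayed feedback.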