Exploring the High-dimensional Random Landscapes of Data Science (3/3)

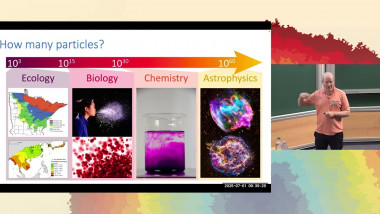

In their final stage, machine learning and data science algorithms require the optimization of complex random functions in very high dimensions. I will briefly survey how topologically complex these landscapes can be, whether this complexity matters, and how the usual simple optimization algorithms, like Stochastic Gradient Descent (SGD), perform in these difficult contexts. I will mostly rely on joint works with Reza Gheissari (Northwestern), Aukosh Jagannath (Waterloo), and Jiaoyang Huang (Wharton).

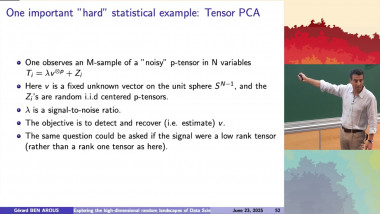

I will first introduce the whole framework for non-experts, from the structure of typical tasks to the natural architectures of neural nets used in standard contexts. I will then briefly cover the classical setting of SGD in finite dimensions. We will then concentrate on a simple example that happens to be close to statistical physics, namely the so-called Tensor PCA model, and show how it is related to spherical spin glasses. We will survey the topological properties of this model and how simple algorithms perform in the basic task of estimating a single spike.

We will then move on to the so-called single index models, and then to the notions of "effective dynamics" and "summary statistics". These effective dynamics, when they exist, run in a much smaller dimension and govern the performance of the algorithm. The next step will be to understand how the system finds these "summary statistics". We will show that this relies on a dynamical spectral transition: along the trajectory of the optimization path, the Gram matrix or the Hessian matrix develops outliers which carry these effective dynamics.

I will first recall the random matrix tools needed here (the behavior of the edge of the spectrum and the BBP transition) in a much broader context. We will illustrate this with a few examples from ML, such as multilayer neural nets for classification (of Gaussian mixtures) and the XOR example. We will also draw on statistics, such as multi-spike Tensor PCA (from a recent joint work with Cedric Gerbelot (Courant) and Vanessa Piccolo (ENS Lyon)).
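The BBP transition mentioned above can be seen numerically in its simplest setting. The following is a minimal sketch, assuming the standard rank-one spiked Wigner model M = θ v vᵀ + W. Here W is a GOE matrix normalized so that its spectrum fills [-2, 2], and v is a random unit vector. This model, its normalization, and the helper names `goe`, `spiked_top_eigenpair` and `bbp_prediction` are illustrative choices, not taken from the lectures. The Gram and Hessian outliers discussed there are only analogous to this toy case. For θ > 1, the top eigenvalue separates from the bulk edge and converges to θ + 1/θ, and its eigenvector has squared overlap 1 - 1/θ² with v. For θ ≤ 1, the top eigenvalue sticks to the edge 2 and the overlap vanishes as n grows.

```python
import numpy as np


def goe(n, rng):
    """Hypothetical helper: GOE matrix with off-diagonal variance 1/n; spectrum fills [-2, 2] as n grows."""
    a = rng.standard_normal((n, n))
    return (a + a.T) / np.sqrt(2 * n)


def spiked_top_eigenpair(n, theta, rng):
    """Top eigenvalue of M = theta * v v^T + W and squared overlap of its eigenvector with v."""
    v = rng.standard_normal(n)
    v /= np.linalg.norm(v)  # unit-norm spike direction
    m = theta * np.outer(v, v) + goe(n, rng)
    eigvals, eigvecs = np.linalg.eigh(m)  # eigenvalues in ascending order
    top_val = float(eigvals[-1])
    overlap2 = float(np.dot(eigvecs[:, -1], v) ** 2)
    return top_val, overlap2


def bbp_prediction(theta):
    """Large-n limits (lambda_max, overlap^2) predicted by the BBP transition for this model."""
    if theta <= 1.0:
        return 2.0, 0.0  # no outlier: top eigenvalue stays at the bulk edge
    return theta + 1.0 / theta, 1.0 - 1.0 / theta**2


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    for theta in (0.5, 1.5, 3.0):
        emp = spiked_top_eigenpair(1000, theta, rng)
        pred = bbp_prediction(theta)
        print(f"theta={theta}: empirical (lambda_max, overlap^2)={emp}, BBP limit={pred}")
```

At n = 1000, the empirical values should agree with the BBP limits up to finite-size fluctuations. These are of order n^(-1/2) for an outlier and of order n^(-2/3) at the edge. Agreement is visibly poorer for θ close to the critical value 1.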